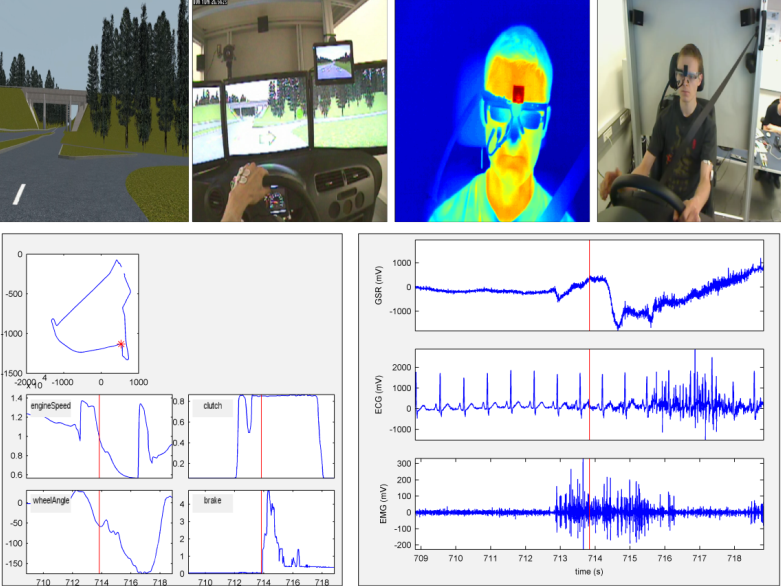

Driving simulator environment

The driving simulator environment is developed in-house. A realistic cabin is constructed on a motion platform and equipped with real pedals, a gearshift and a force-feedback steering wheel. Simulator software (SCANeR Studio, AVSimulation) is used to create realistic scenarios of various traffic situations. The virtual environment can be shown on screens or via a virtual reality headset. A surround sound system and a darkened room are used to ensure an immersive experience. The motion platform simulates the g-forces of acceleration, deceleration and cornering during driving.

In the simulator environment, various biosignals, such as EMG, ECG and galvanic skin response (GSR), can be measured. In addition, the driver’s eye movements can be tracked, and thermal imaging can be used to detect temperature changes on the driver’s face. In a pilot study, a group of professional and non-professional elderly drivers was measured in the simulator. Differences between the groups were observed in eye movements and in the use of the gear stick.

Kinematics of the driver can be measured using wearable IMUs; joint angles from ankle to neck and wrist can be monitored.

Two different eye tracking systems are available: a wearable system (Arrington Research) and a remote two-camera system (Smart Eye XO). The Smart Eye system enables tracking of gaze on objects in the simulation environment.

By combining these measurements with the group’s experience in signal analysis, the driver’s physiological state can be monitored.